| Type | Member |
| --- | --- |
| | UTF8Encoding () |
| | UTF8Encoding (bool encoderShouldEmitUTF8Identifier) |
| | UTF8Encoding (bool encoderShouldEmitUTF8Identifier, bool throwOnInvalidBytes) |
| override int | GetByteCount (char[] chars, int index, int count) |
| override int | GetByteCount (string chars) |
| unsafe override int | GetByteCount (char *chars, int count) |
| override int | GetBytes (string s, int charIndex, int charCount, byte[] bytes, int byteIndex) |
| override int | GetBytes (char[] chars, int charIndex, int charCount, byte[] bytes, int byteIndex) |
| unsafe override int | GetBytes (char *chars, int charCount, byte *bytes, int byteCount) |
| override int | GetCharCount (byte[] bytes, int index, int count) |
| unsafe override int | GetCharCount (byte *bytes, int count) |
| override int | GetChars (byte[] bytes, int byteIndex, int byteCount, char[] chars, int charIndex) |
| unsafe override int | GetChars (byte *bytes, int byteCount, char *chars, int charCount) |
| override string | GetString (byte[] bytes, int index, int count) |
| override Decoder | GetDecoder () |
| override Encoder | GetEncoder () |
| override int | GetMaxByteCount (int charCount) |
| override int | GetMaxCharCount (int byteCount) |
| override byte[] | GetPreamble () |
| override bool | Equals (object value) |
| override int | GetHashCode () |
| virtual object | Clone () |
| virtual byte[] | GetBytes (char[] chars) |
| virtual byte[] | GetBytes (char[] chars, int index, int count) |
| virtual byte[] | GetBytes (string s) |
| virtual int | GetBytes (ReadOnlySpan<char> chars, Span<byte> bytes) |
| virtual char[] | GetChars (byte[] bytes) |
| virtual char[] | GetChars (byte[] bytes, int index, int count) |
| virtual int | GetChars (ReadOnlySpan<byte> bytes, Span<char> chars) |
| unsafe string | GetString (byte *bytes, int byteCount) |
| string | GetString (ReadOnlySpan<byte> bytes) |
| virtual string | GetString (byte[] bytes) |

Definition at line 10 of file UTF8Encoding.cs.
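
The member list gives signatures only, so a short usage sketch may help show how the constructors, `GetByteCount`, `GetBytes`, `GetString`, and `GetPreamble` fit together. It assumes this class behaves like the standard .NET `System.Text.UTF8Encoding`; the class name `Utf8EncodingSketch` and the sample string are illustrative and do not come from the source.

```csharp
using System;
using System.Text;

// Illustrative only: assumes standard .NET UTF8Encoding semantics.
class Utf8EncodingSketch
{
    static void Main()
    {
        // No byte order mark on output; reject malformed input instead of
        // silently substituting replacement characters.
        var strict = new UTF8Encoding(
            encoderShouldEmitUTF8Identifier: false,
            throwOnInvalidBytes: true);

        string text = "héllo";

        // GetByteCount reports the exact size GetBytes will produce.
        int needed = strict.GetByteCount(text);
        byte[] bytes = strict.GetBytes(text);
        Console.WriteLine(needed == bytes.Length);

        // Decoding the encoded bytes should give back the original string.
        Console.WriteLine(strict.GetString(bytes) == text);

        // GetPreamble reflects the first constructor argument: empty when
        // the identifier is disabled, the UTF-8 BOM when it is enabled.
        var withBom = new UTF8Encoding(true);
        Console.WriteLine(strict.GetPreamble().Length);
        Console.WriteLine(withBom.GetPreamble().Length);

        // A lone lead byte (0xC3) is an incomplete sequence, so the strict
        // instance is expected to throw rather than decode it.
        try
        {
            strict.GetString(new byte[] { 0xC3 });
            Console.WriteLine("decoded without error");
        }
        catch (ArgumentException)
        {
            Console.WriteLine("invalid bytes rejected");
        }
    }
}
```

Passing `throwOnInvalidBytes: true` is a reasonable default when the input must be validated; the parameterless constructor is the more lenient choice when malformed input should not interrupt processing.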

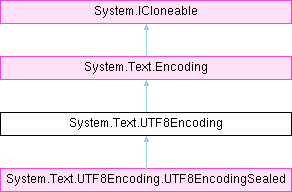

Inheritance diagram for System.Text.UTF8Encoding:
